- 4 Posts

- 200 Comments

1·7 days ago

Yeah, but they encourage confining it to a virtual machine with limited access.

5·7 days ago

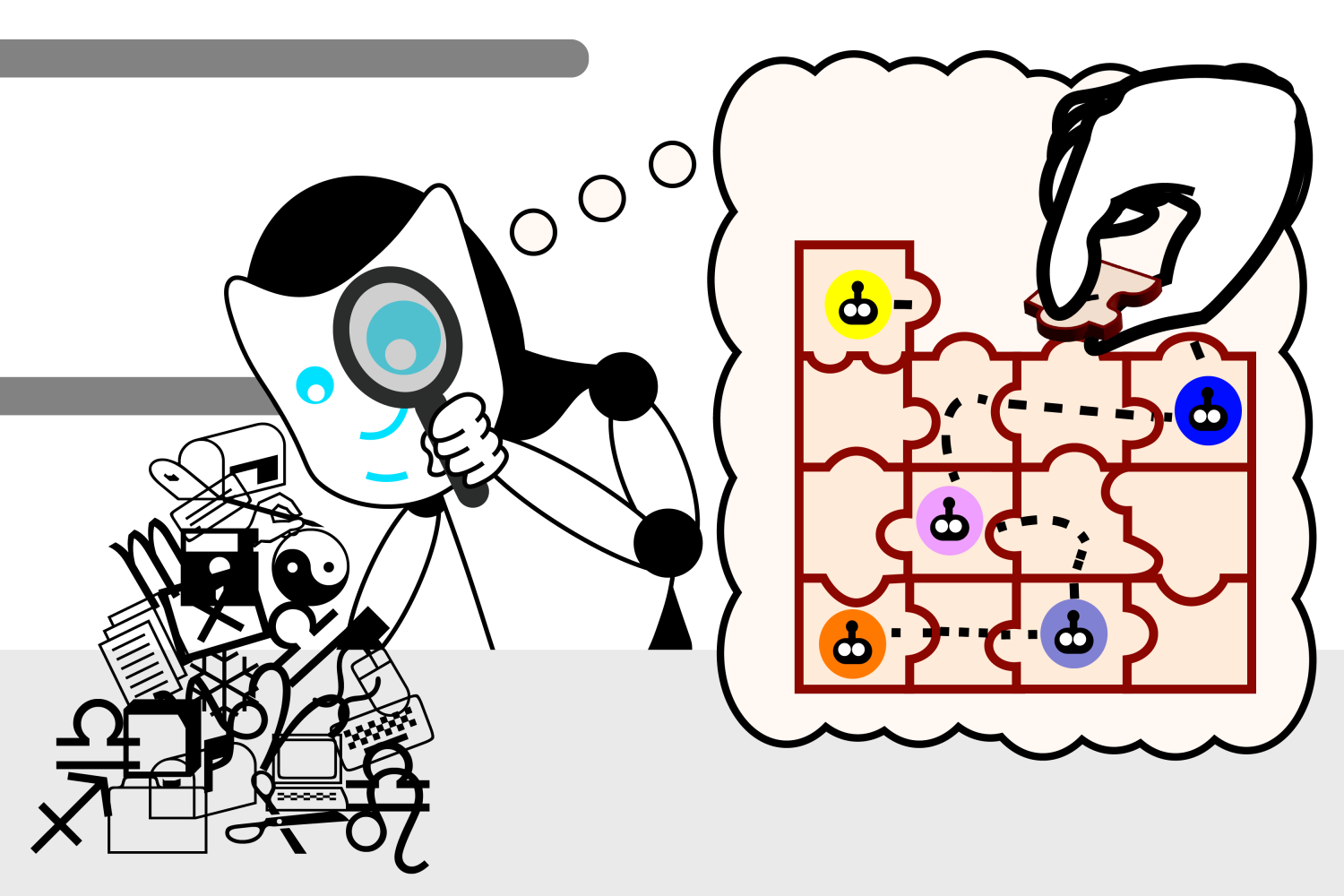

5·7 days agoLogic and Path-finding?


389·9 days ago

Shithole country.

3·11 days ago

Yeah, using image recognition on a screenshot of the desktop and directing a mouse around the screen with coordinates is definitely an intermediate implementation. Open Interpreter, Shell-GPT, LLM-Shell, and DemandGen make a little more sense to me for anything that can currently be done from a CLI, but I’ve never actually tested ’em.

71·11 days ago

I was watching users test this out and am generally impressed. At one point, Claude tried to open Firefox, but it was not responding. So it killed the process from the console and restarted. A small thing, but not something I would have expected it to overcome this early. It’s clearly not ready for prime time (by their repeated warnings), but I’m happy to see these capabilities finally making it to a foundation model’s API. It’ll be interesting to see how much remains of GUIs (or high level programming languages for that matter) if/when AI can reliably translate common language to hardware behavior.

Can I blame Trump on 9/11 or something?

112·11 days ago

Wow, that’s… somethin. I haven’t paid any attention to Character AI. I assumed they were using one of the foundation models, but nope. Turns out they trained their own. And they just licensed it to Google. Oh, I bet that’s what drives the generated podcasts in Notebook LM now. Anyway, that’s some fucked up alignment right there. I’m hip deep in the stuff, and I’ve never seen a model act like this.

413·11 days ago

He ostensibly killed himself to be with Daenerys Targaryen in death. This is sad on so many levels, but yeah… parenting. Character.AI may have only gone 17+ in July, but Game of Thrones was always TV-MA.

Aren’t they in Macy’s now? Wait, is Macy’s still a thing?

ÆLON is totally what he’s gonna call his AI clone.

In its latest audit of 10 leading chatbots, compiled in September, NewsGuard found that AI will repeat misinformation 18% of the time.

70% of the instances where AI repeated falsehoods were in response to bad actor prompts, as opposed to leading prompts or innocent user prompts.

28·13 days ago

To be clear, it’ll be 10-30 years before AI displaces all human jobs.

112·2 months ago

an eight-year-old girl was among those killed

4·2 months ago

Hop on Adobe stock right now and search for something. Half of the results will be AI-generated. There’s a search filter that can exclude them.


351·2 months ago

Covfefe

Just for fun, this can be accomplished with a poorly shielded speaker/audio cable next to a poorly shielded CRT/monitor cable displaying a locally run LLM. And it will make you feel like a hax0r.